In a short video yesterday, Google's Matt Cutts told webmasters and SEOs not to use article directory sites for link building strategies.

Well, he sort of said not to use them, and hinted at it at the end by saying:

Here is the video:


Today, we are taking a first step of many to improve categories that merchants can use to represent their businesses. Specifically, we’re adding over 1,000 new categories in the new Places dashboard. These categories are available globally and translated to every language Google supports.

1) What is the surefire way to make sure a link is 100% bad?

2) I don't want to remove all links because I am worried my site will drop even more. I'm sure there are some semi-good links that might be helping.

It is sad, indeed. But you need to disavow the links, that is for sure. Those links are not helping you and they are now hurting you. Remove the hurt. Then get people to link to you because they want to link to you.

3) After submitting a disavow file, how long does it typically take to recover? We have two sites: one seems to be affected by the Penguin and Panda updates, and the other received a manual penalty for certain bad links for certain terms.
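For anyone putting a disavow file together, here is a rough sketch of one way to generate it. It assumes the plain-text format Google's disavow tool accepts: one URL per line, a `domain:` prefix to disavow a whole domain, and `#` for comment lines. The function name `build_disavow_lines` and the example URLs are made up for illustration, and deciding which links are actually bad is still up to you.

```python
from urllib.parse import urlparse


def build_disavow_lines(bad_urls, whole_domains=()):
    """Return the lines of a disavow file as a list of strings.

    bad_urls: individual link URLs you have judged harmful.
    whole_domains: domains to disavow entirely (written as 'domain:...').
    Both inputs are assumptions supplied by the caller; nothing here
    detects bad links automatically.
    """
    domains = {d.lower() for d in whole_domains}
    lines = ["# Links reviewed by hand and judged harmful"]

    # Whole-domain entries first, sorted for a stable file.
    for domain in sorted(domains):
        lines.append(f"domain:{domain}")

    # Individual URLs, skipping duplicates and anything already
    # covered by a whole-domain entry.
    seen = set()
    for url in bad_urls:
        host = (urlparse(url).hostname or "").lower()
        if host in domains or url in seen:
            continue
        seen.add(url)
        lines.append(url)
    return lines


if __name__ == "__main__":
    # Hypothetical example data.
    example = build_disavow_lines(
        ["http://spam-directory.example/page1",
         "http://article-farm.example/post/42"],
        whole_domains=["spam-directory.example"],
    )
    print("\n".join(example))
```

Write the result to a plain `.txt` file and upload that. Keeping a comment line about why each batch was added makes later reviews easier.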

So Google now allows you to "tag" an author in your content. Good authors who are popular get extra ranking bonuses for their articles.

So it seems very simple to me. Find a popular author in your niche, and tag him in your links to your content.

Extra link juice off someone else's work.

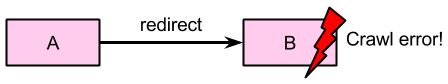

I've seen this come up more since custom/vanity URLs for Google+ profiles have become more popular. Authorship works fine with vanity profile URLs, it works fine with the numeric URLs, and it doesn't matter if you link to your "about" or "posts" page, or even just to the base profile URL. The type of redirect (302 vs 301) also doesn't matter here. If you want to have a bit of fun, you can even use one of the other Google ccTLDs and link to that.

So there you have it, concrete information from Google on one of the scary topics to touch on.